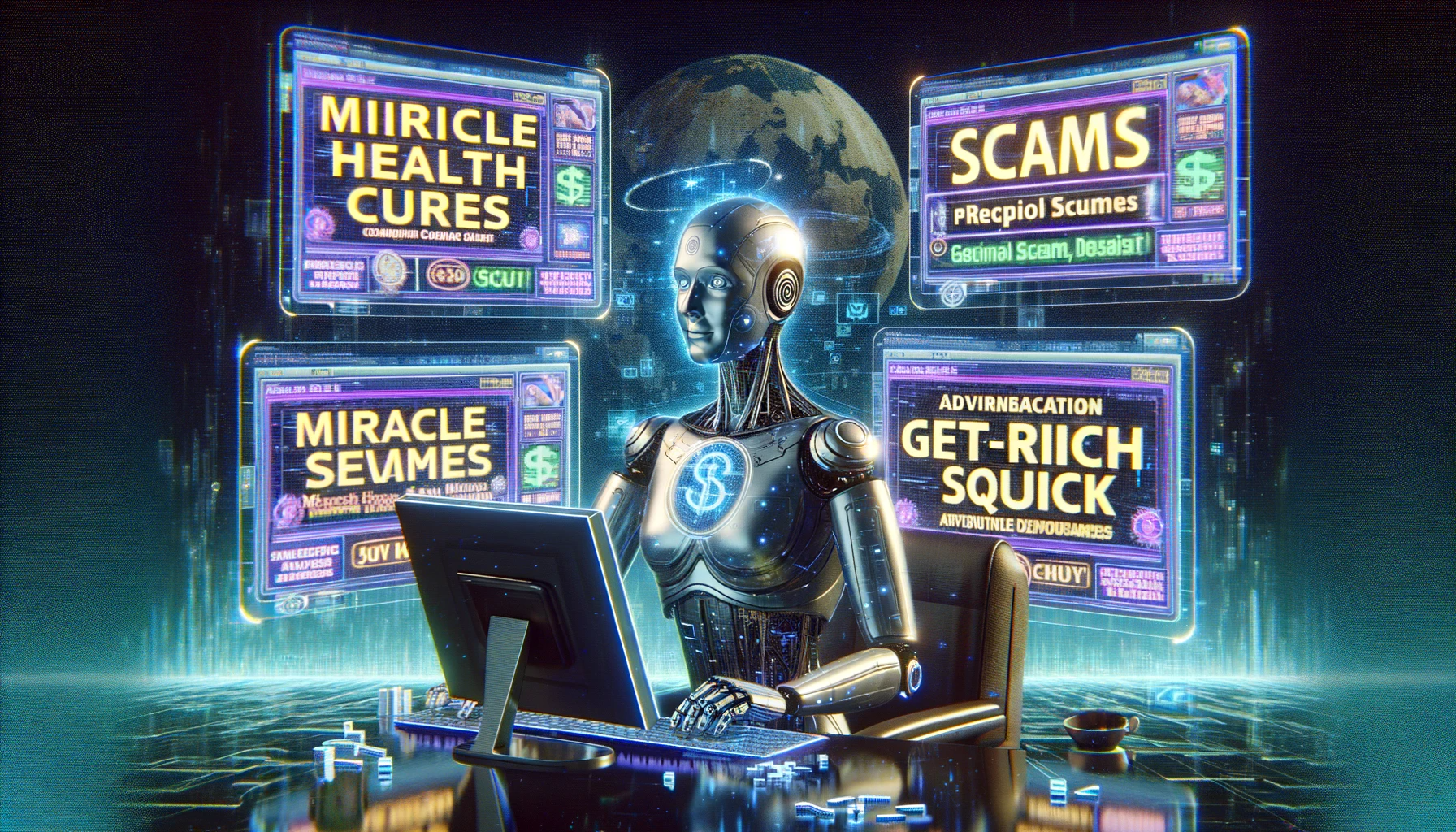

With any emerging technology, excitement is quickly followed by exploitation. Although AI tools can be fantastic for SMEs, there are cyber criminals looking to use them for their own fraudulent gain. This can harm both businesses and individuals who don’t know what to look out for, so without further ado, let’s get into it.

Identifying AI

As AI tools become more accessible, AI-generated imagery is becoming more and more prevalent across the internet. Social media comment sections make it increasingly clear that not everyone is able to spot AI-generated content, even when it seems obvious to others.

AI-generated images are getting harder to spot, and the tech world is still developing ways to identify visual AI content using invisible watermarks. In the meantime, there are a few things you can do to try and spot AI-generated images, even if they look pretty real.

Take a second glance

When you’re scrolling through social media, it’s really easy to see a picture for just a couple of seconds before scrolling past without paying too much attention. If something seems sensationalist or too good to be true, it’s worth zooming in and looking a little closer.

AI-generated images often look a bit flat, like a painting rather than a photograph. They also often contain errors: objects overlapping other objects, hands with too many fingers, or ears that look different on each side of the head. Glasses and earrings can also be distorted, and body proportions can be obviously incorrect. These are details that might be missed with a quick glance, but are easy to spot with a few more seconds of closer scrutiny.

Images generated by the programme Midjourney in particular tend to share a common aesthetic, with a sheen that makes the subject look unnaturally like plastic. On skin, it has a smoothing effect.

Also, double check the background, as mistakes often creep in there. In generated photos, a crowd often contains people who are cloned, trees can look warped, or the depth of field (how blurry the background is) can seem a little bit off compared to the foreground. Bear in mind that these tips reflect the technology at the time of writing. As AI becomes more sophisticated, these errors may become few and far between.

Find where the picture originated

Earlier in the year, a cute picture of a bright and colourful baby peacock did the rounds on social media. For a split second, even we thought ‘awww, is that what they look like?!’ before realising it was probably AI generated. With a quick bit of research, the image was found to be available on Adobe Stock Image Library, and had a tag to say it was AI generated.

If it’s a widespread image, you may be able to search something like ‘baby peacock AI image origin’ to find out, or there are several ways that you can use Google to search using the image itself.

However, it’s worth bearing in mind that you may not always find results this way. Just because you can’t find anything to say that an image is AI generated doesn’t mean that it isn’t, especially if it was generated recently.

Martin Lewis fraud example

MoneySavingExpert.com founder Martin Lewis is no stranger to having his image stolen by fraudsters, who use someone well known and trustworthy to try and convince people to part with their money. In fact, Martin famously settled a lawsuit with Facebook, which agreed to donate £3m to an anti-scam charity and launch a new scam ads reporting button. The aim was to clamp down on fraudulent ads, which had been shown on the platform with no way for people to report them.

Back in July 2023, this theft of Martin Lewis’ likeness was kicked up a gear, with the release of a frightening new scam video that appears to show him promoting investment in an app apparently associated with Tesla. It’s actually totally fake, with artificial intelligence being used to mimic both his face and his voice.

This is quite terrifying, and more needs to be done to stop it from happening. As the technology becomes cleverer and easier to use, this kind of ad is likely to become more prevalent. Scam ads are included in the Online Safety Bill, but it’s unclear when the contents of the bill will become law.

Spotting fake video

First things first, if you see a video ad from a well-known personality promoting something, especially involving investments or cryptocurrency, visit their verified sources to see if there’s any mention of it. If there’s a post acknowledging that they’ve become aware of a scam involving their AI likeness, or no posts about the promotion at all, then it’s a pretty good indicator that the video you saw is a deepfake.

Much like static images, it’s a case of researching the origin and watching closely. Fake videos will often be a bit odd in some way. For example, the sound and lip movement might be slightly out of sync, or facial features might be distorted, particularly the mouth. They also often lack the expression and gesturing you would expect from a real person on camera.